Critical Crawler Issues


What's Covered?

This guide offers an overview of the types of issues flagged in the Critical Crawler Issues section of your Site Crawl. It also outlines steps you can take to start investigating these issues.


Finding and Fixing 5xx, 4xx, and Redirect to 4xx Errors

What Are 5xx Errors?

5xx status codes, such as 500 (Internal Server Error), 502 (Bad Gateway), and 503 (Service Unavailable), indicate a server-side error. This means your site is serving an error to our crawler, which may mean that human visitors can't access your site either.

Finding 5xx Errors in Moz Pro Site Crawl

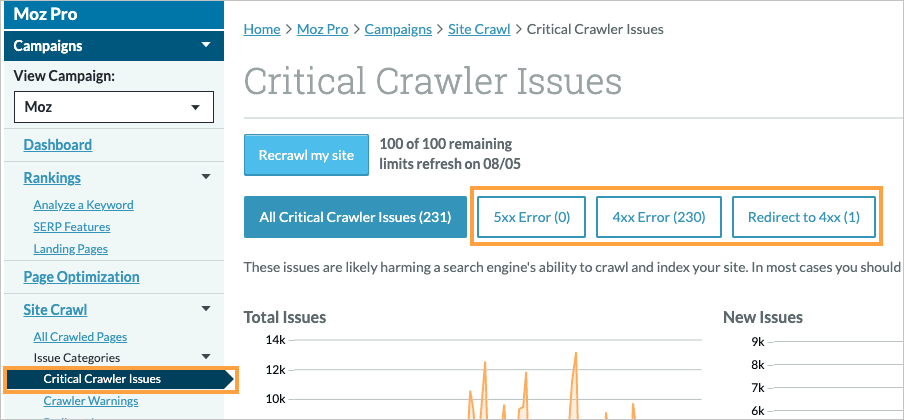

To find 5xx errors in Moz Pro, head to Site Crawl > Critical Crawler Issues > 5xx Error.

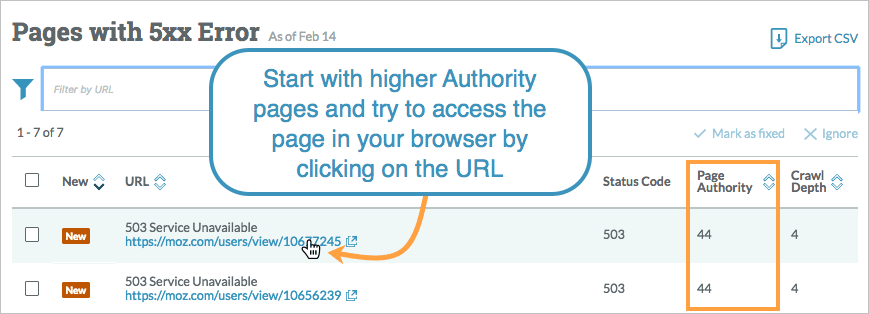

To start investigating, try the following:

- Prioritize your higher-authority pages

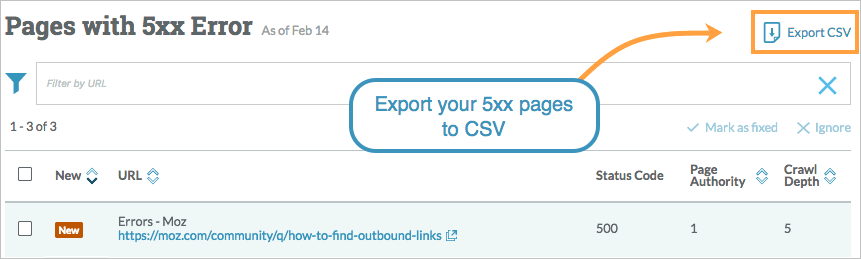

- Export the 5xx pages to CSV to keep a record; you can find the finished export in your Export Notifications

- Open the URL in your browser to see if you can access the page

- Use a third-party tool like https://httpstatus.io/ to see the current status of your page; this approximates how our crawler requests your site. Take a screenshot of the results to share with your website host.

This should give you an idea of how much the issue affects your visitors.
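If you'd like to script this check, or see whether an error comes and goes, the sketch below requests a URL several times and tallies the status codes it receives. It is a minimal illustration using only Python's standard library: `status_of` and `sample_statuses` are hypothetical helper names, and the User-Agent string is a placeholder, not rogerbot's. Hosts can respond differently to different user agents and IP addresses, so a clean result here doesn't guarantee our crawler will get the same response.

```python
import time
import urllib.error
import urllib.request
from collections import Counter

# Placeholder User-Agent for this sketch; it is NOT rogerbot's real user-agent string.
USER_AGENT = "status-check-sketch/0.1"


def status_of(url, timeout=10.0):
    """Return the final HTTP status code for url, or None if no response arrived.

    urllib follows redirects automatically, so this reports the status of the
    last page in any redirect chain.
    """
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as HTTPError; the status code is still available.
        return error.code
    except OSError:
        # URLError (DNS failure, refused connection) and socket timeouts are OSError subclasses.
        return None


def sample_statuses(url, attempts=5, pause=2.0):
    """Request url several times and count how often each status code comes back."""
    counts = Counter()
    for attempt in range(attempts):
        counts[status_of(url)] += 1
        if attempt < attempts - 1:
            time.sleep(pause)  # space requests out so the check itself doesn't strain the server
    return counts
```

For example, calling `sample_statuses("https://www.example.com/some-page")` (a hypothetical URL) that returns a mix of 200 and 503 results would suggest the error is intermittent rather than permanent.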

5xx errors may be intermittent or temporary, and are usually resolved by working with your website host. You may want to do the following:

- Check with your website administrator or host to see if they are blocking AWS (Amazon Web Services), where our crawler, rogerbot, is hosted

- Ask your host whether they are serving errors to our crawler because it is making too many requests. If so, you can tell rogerbot to slow down by adding a crawl delay to your robots.txt file

- Once you've taken action, you can use the Recrawl button in Moz Pro to start a fresh crawl of your site. This feature requires a Medium Moz Pro plan or above.
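A crawl delay for rogerbot might look like the robots.txt rules in the sketch below. The 10-second value is only an example; choose a delay your host is comfortable with. To sanity-check the syntax, the sketch parses the rules with Python's standard `urllib.robotparser` module, which is a generic robots.txt parser and not the parser rogerbot itself uses. `crawl_delay_for` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt rules: ask rogerbot to wait 10 seconds between requests.
# The delay value is illustrative; pick one that suits your server.
ROBOTS_TXT = """\
User-agent: rogerbot
Crawl-delay: 10
"""


def crawl_delay_for(robots_txt, user_agent):
    """Return the Crawl-delay (in seconds) that robots_txt sets for user_agent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


print(crawl_delay_for(ROBOTS_TXT, "rogerbot"))   # 10
print(crawl_delay_for(ROBOTS_TXT, "Googlebot"))  # None: this rule group only targets rogerbot
```

Because the rule group names rogerbot specifically, other crawlers are unaffected by this delay.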

What Are 4xx Errors?

4xx status codes are returned when a requested resource cannot be reached or found. The page may no longer exist, or there may be an error in the link on your site (e.g. a formatting issue or a misspelled URL).
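The first digit of an HTTP status code gives its class, which is where the 4xx and 5xx labels in Site Crawl come from. As a small illustration, the sketch below groups codes by class and looks up their standard reason phrases with Python's built-in `http.HTTPStatus` enum; `status_class` is a hypothetical helper name.

```python
from http import HTTPStatus


def status_class(code):
    """Return a short description of an HTTP status code and its class."""
    classes = {
        2: "success (2xx)",
        3: "redirect (3xx)",
        4: "client error (4xx)",
        5: "server error (5xx)",
    }
    kind = classes.get(code // 100, "other")
    try:
        phrase = HTTPStatus(code).phrase  # standard reason phrase, e.g. "Not Found"
    except ValueError:
        phrase = "non-standard code"  # codes that are missing from the HTTPStatus enum
    return f"{code} {phrase}: {kind}"


for code in (404, 410, 500, 502, 503):
    print(status_class(code))
```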

Finding 4xx Errors in Moz Pro Site Crawl

To find 4xx errors in Moz Pro, head to Site Crawl > Critical Crawler Issues > 4xx Error.

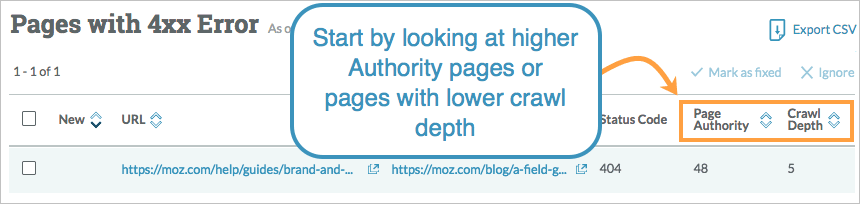

- Start by looking at high authority pages, or pages with a lower crawl depth

- Click on the headers in Moz Pro to sort the pages

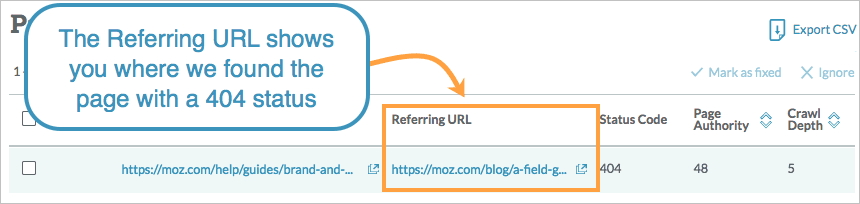

- Head to the Referring URL and search its page source for the link to the 404 page

- To open the page source, right-click the page and select 'View Page Source', then search for the broken URL with CMD + F (or CTRL + F on Windows).
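If you have many referring pages to check, the page-source search can be scripted. The sketch below uses Python's built-in `html.parser` to list every `<a href>` on a referring page that resolves to the broken URL, including relative links. The `find_links_to` name and the example URLs are hypothetical; get the referring page's HTML however you like (for example, by saving it from 'View Page Source').

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _LinkCollector(HTMLParser):
    """Collect the raw href value of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.hrefs.append(value)


def find_links_to(html, page_url, broken_url):
    """Return the href values in html that resolve to broken_url.

    page_url is the referring page's own URL, used to resolve relative links.
    Stray whitespace is stripped so malformed links are still found.
    """
    collector = _LinkCollector()
    collector.feed(html)
    return [href for href in collector.hrefs
            if urljoin(page_url, href.strip()) == broken_url]


html = '<p><a href="/old-page">Old</a> <a href="https://www.example.com/contact">Contact</a></p>'
print(find_links_to(html, "https://www.example.com/blog/", "https://www.example.com/old-page"))
# ['/old-page']
```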

There are a few different options for fixing 404 issues depending on the reason for the error. You may want to do the following:

- Fix the link on the referring URL if the content still exists

- If the resource no longer exists then update the link on the referring URL to point to a relevant and working URL

- Add a 301 redirect from the 404 URL to a relevant resource on your site

- Ignore the error. Most site owners accept that every site will have a few 404 errors, especially ecommerce sites. If you decide not to fix them, you may want to create a custom 404 page to help visitors who land on a missing page find what they're looking for.
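How you add a 301 redirect or a custom 404 page depends on your CMS or web server; many CMSs offer a redirect setting or plugin. Purely to illustrate the HTTP behaviour, the sketch below is a tiny WSGI app built on Python's standard `wsgiref` module. The paths in `REDIRECTS` and the page content are hypothetical. Note that the custom 404 page is still served with a 404 status: serving a friendly page with a 200 status would make the missing page look like a real page to crawlers.

```python
from wsgiref.simple_server import make_server

# Hypothetical redirect map: retired URL path -> most relevant live page.
REDIRECTS = {
    "/old-product": "/products/new-product",
}

CUSTOM_404 = b"""<html><body>
<h1>Sorry, we can't find that page</h1>
<p>Try the <a href="/">home page</a> or use the site search.</p>
</body></html>"""


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        # 301 tells browsers and crawlers that the move is permanent.
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path == "/":
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [b"<html><body><h1>Home</h1></body></html>"]
    # Everything else gets a helpful page, but still with a real 404 status.
    start_response("404 Not Found", [("Content-Type", "text/html; charset=utf-8")])
    return [CUSTOM_404]


def serve(port=8000):
    """Run the sketch locally, then visit http://127.0.0.1:8000/old-product."""
    with make_server("127.0.0.1", port, app) as server:
        server.serve_forever()
```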

You can learn more about ignoring issues or marking them as fixed in the Tracking Issue Resolution Progress section below.

I have pages marked as 404s in Site Crawl but when I click on them, they resolve - what do I do?

If you are seeing pages flagged as 404s but you're able to access them in your browser, first try accessing the page in an incognito (or private browsing) window. Sometimes a page that returns a 404 to visitors will still load for a website admin who is logged into the CMS or admin area.

If the page still resolves in an incognito window, try running a recrawl for your site to see if the page continues to be flagged.

If you’re still having trouble after trying these steps, you can email our Help Team at help@moz.com with the URL of the page being flagged and they can take a look to see what information they can provide about the issue.

404s With %20 or %0A (Percent Encoding)

Our crawler percent-encodes the URLs it finds in your HTML source code and sitemap, so any line breaks and spaces inside those URLs are converted to %0A and %20 respectively. Because the encoded URL doesn't exist on your site, it returns a 404 error.

If this issue originates from your sitemap, place the opening and closing "loc" tags on the same line as the target URL, with no spaces or line breaks between the tags and the URL, as shown in the sitemap protocol documentation: http://www.sitemaps.org/protocol.html
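To see why a line break inside a loc tag matters, the sketch below reads a sitemap entry with Python's standard `xml.etree.ElementTree` and percent-encodes the raw value with `urllib.parse.quote`. It only illustrates the encoding described above; it is not how our crawler is implemented. The example sitemaps and the `loc_values` / `stray_whitespace` helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import quote

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Problem case: the URL sits on its own line between the <loc> tags.
BROKEN = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>
      https://www.example.com/page
    </loc>
  </url>
</urlset>"""

# Correct format: opening tag, URL, and closing tag on one line.
FIXED = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/page</loc>
  </url>
</urlset>"""


def loc_values(sitemap_xml):
    """Return the raw text of every <loc> element, whitespace included."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text for loc in root.findall("sm:url/sm:loc", NS)]


def stray_whitespace(sitemap_xml):
    """Return <loc> values that contain any space or line break."""
    return [value for value in loc_values(sitemap_xml)
            if value and any(ch.isspace() for ch in value)]


for value in loc_values(BROKEN):
    # Percent-encoding keeps the whitespace: line breaks become %0A, spaces %20.
    print(quote(value, safe=":/"))

print(stray_whitespace(FIXED))  # []
```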

404s That Look Like Two URLs Joined Together

Some 404 pages show up in your Site Crawl report like this: http://mysite.com/page/www.twitter.com/. This looks like two URLs joined together, and it usually indicates that a link in the source code of the referring page is incorrectly set up as a relative link. For your 404 pages, this means that somewhere in the source of these referring pages (found in the Referring URL column of the error table), you will find links missing the protocol (http:// or https://).

To fix this, investigate how your links are set up on the referring page and add the http:// or https:// protocol to the affected links.
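The joined-up URL is exactly what relative-link resolution produces. The sketch below uses Python's `urllib.parse.urljoin`, which resolves relative links much as a browser or crawler would, to show how a link written as `www.twitter.com/` on `http://mysite.com/page/` becomes the 404 above. The `looks_like_missing_protocol` check is a hypothetical, deliberately narrow heuristic that only catches scheme-less links starting with "www.".

```python
from urllib.parse import urljoin, urlsplit

REFERRING_PAGE = "http://mysite.com/page/"

# Missing protocol: the href is treated as a path relative to the referring page.
print(urljoin(REFERRING_PAGE, "www.twitter.com/"))          # http://mysite.com/page/www.twitter.com/
# With the protocol, the link points to the external site as intended.
print(urljoin(REFERRING_PAGE, "https://www.twitter.com/"))  # https://www.twitter.com/


def looks_like_missing_protocol(href):
    """Hypothetical heuristic: flag scheme-less links that start with "www."."""
    href = href.strip()
    return not urlsplit(href).scheme and href.lower().startswith("www.")
```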

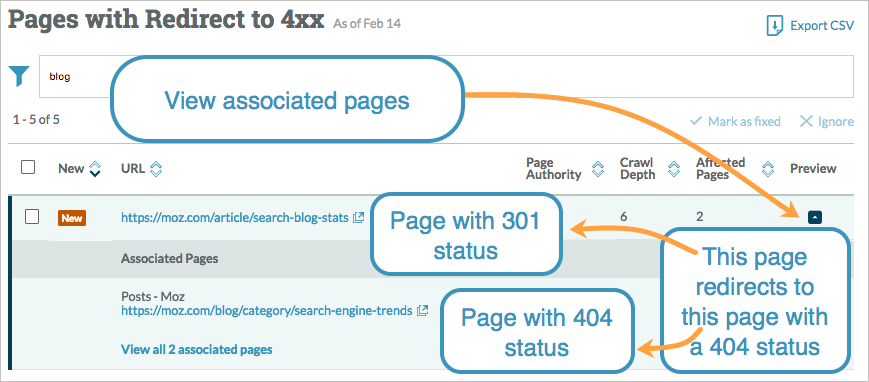

What Is a Redirect to 4xx?

A Redirect to 4xx means that a page on your site redirects to a page that isn't accessible. This can hurt your visitors' experience and reduce crawler efficiency.

To find Redirect to 4xx issues in Moz Pro, head to Site Crawl > Critical Crawler Issues > Redirect to 4xx.

- Start by looking at high authority pages, or pages with a lower crawl depth

- Click on the headers in Moz Pro to sort the pages

- Select the dropdown under Preview to view the associated pages

There are a few different options for fixing Redirect to 4xx issues, depending on the reason for the error. You may want to do the following:

- Change the redirect to point to an active and relevant resource

- Fix the 404 issue using the steps above
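To confirm which redirect ends at an error page, you can follow the chain one hop at a time. The sketch below uses Python's standard `http.client`, which does not follow redirects on its own, to record each URL and status code until it reaches a non-redirect response. `trace_redirects` and `redirects_to_4xx` are hypothetical helper names, and the limit of 10 hops is an arbitrary choice.

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def trace_redirects(url, max_hops=10, timeout=10.0):
    """Follow redirects from url one hop at a time; return a list of (url, status) pairs."""
    hops = []
    for _ in range(max_hops):
        parts = urlsplit(url)
        connection_class = (http.client.HTTPSConnection if parts.scheme == "https"
                            else http.client.HTTPConnection)
        connection = connection_class(parts.netloc, timeout=timeout)
        target = parts.path or "/"
        if parts.query:
            target += "?" + parts.query
        try:
            connection.request("GET", target)
            response = connection.getresponse()
            status, location = response.status, response.getheader("Location")
        finally:
            connection.close()
        hops.append((url, status))
        if status in REDIRECT_CODES and location:
            url = urljoin(url, location)  # the Location header may be relative
        else:
            break
    return hops


def redirects_to_4xx(hops):
    """True if the chain contains at least one redirect and ends on a 4xx status."""
    return len(hops) > 1 and 400 <= hops[-1][1] < 500
```

Printing `trace_redirects(...)` for a URL listed under Redirect to 4xx shows every hop, so you can see which redirect target needs updating.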

Once you've fixed the issue through your website CMS, you can mark it as Fixed in Moz Pro and we'll check it again the next time we crawl your site.

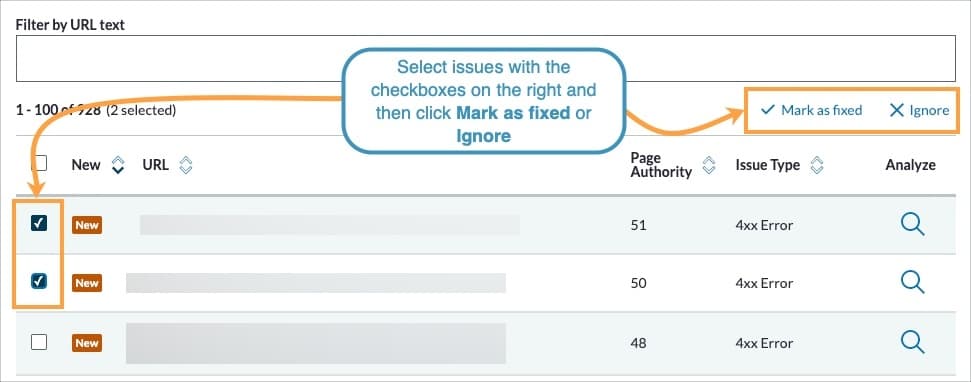

Tracking Issue Resolution Progress

As you work through issues flagged in your Campaign’s Site Crawl section you can track your progress by marking issues as fixed or by ignoring them.

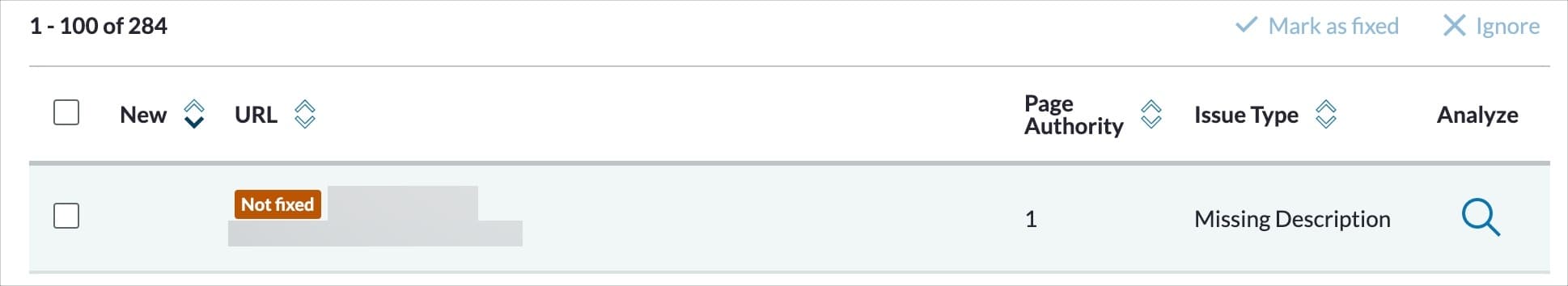

Mark as fixed: Marking an issue as Fixed indicates to our crawler that you have taken action on your site to resolve the issue. If the crawler does not encounter the issue again during the next crawl of your site, it will be removed from your list of Site Crawl issues. If the issue is still found, it will be marked as Not fixed.

Ignore: Marking an issue as Ignore indicates to our crawler that you are aware of the issue but don't plan to resolve it. The issue will be removed from your list of Site Crawl issues and won't be reported in upcoming crawls. You can Unignore issues at any time from your Campaign Settings.

To mark issues as Fixed or Ignore, select them using the checkboxes on the left, then click Mark as fixed or Ignore at the top right.
